3 Geo-enrichment Insights Supporting Data Science – Automating Processes and Spatial Intelligence Using Cloud Technology

Following the first blog in this series about location determination, this second blog continues to explore the benefits geo-enrichment can provide with big data and cloud-native technologies through location determination, feature selection, and data extraction. Learn how pairing spatial intelligence with cloud computing power for complex geospatial and routing processing helps you organize and manage data efficiently, save your data science teams time, deliver data you can trust, and enable accurate insights for faster, more confident decision-making.

Overview and summary

Identifying where customers are located is critical to spatial intelligence and creating a high-quality, operational geo-enrichment process. Several under-utilized spatial processing and routing tools can easily add value to automated Location Intelligence (LI) processes.

In this blog, we’ll describe how to extract value from traditional GIS data. Using these tools to understand geospatial relationships, such as finding nearby points, can add meaningful context for your business decisions. We’ll explain how easy it is to combine geospatial processes into a sequence of steps that bring more geospatial data into automated business processes. Let’s dive into how to bring more value to your business operations through:

- Feature Selection

- Data Extraction

- Routing polygons or catchment areas

Feature selection

Select areas, points, lines, by distance, and location IDs

Feature selection is the second of four keys to geo-enrichment. Location Determination is the first key and defines where customers are located. (Details about Location Determination can be found in the first blog.)

Feature selection is the geospatial query process used to identify relevant geographic features for more insight. Typical geospatial features are:

- Areas – the selection of areas involves determining which 5G service area customers are within, which crime risk region they fall in, or which 5-mile catchment area around a delivery service location contains them. Precisely uses a Point-in-Polygon operation to select areas.

- Points – point selection typically identifies the nearest business, risk, or customer locations. Finding the nearest schools or fire stations, or counting how many homes are within 20 miles, are examples of how Precisely’s Find Nearest query is used in Spark.

- Lines – the Find Nearest query can also be used to select the closest linear features. Bus routes, optical fiber cables, fault lines and gas lines can be identified. Information from these features is added to automated processing to determine services and risk factors. Even street network features can be used to determine if a location is near an intersection or on a narrow urban block.

- Distance – how far to search is an important factor for Point-in-Polygon and Find Nearest queries. Due to the high compute requirements for these kinds of searches, most organizations don’t search very far. But with distributed compute and the ephemeral nature of Spark and Kubernetes, larger search areas can be used with less time and cost. We can incorporate risk factors from bigger areas and estimate the impact of customer behavior from farther away and in more depth.

- Location IDs – these are used like points because they are tied to address points, like the PreciselyID, or to small areas, like geohashes. They can be selected using the Find Nearest query, but the more common use in Spark environments is selecting them together with surrounding areas to answer questions like, “How many light industrial businesses are within the census block containing this location?” They can also be used in simple distance searches using the Find Nearest query to answer questions like, “How many sinkholes are within 3 miles of this house?” However, the most interesting questions use human mobility boundaries as the containing areas for queries with location IDs and other points.
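To make the area and point selections above more concrete, here is a minimal sketch in plain Python rather than Spark. It is not Precisely's API: the function names (`point_in_polygon`, `find_nearest`), the sample coordinates, and the 20-mile search distance are illustrative assumptions, and a production job would likely use a spatial index instead of scanning every feature.

```python
import math

def point_in_polygon(lon, lat, ring):
    """Ray-casting test: True if (lon, lat) lies inside the polygon ring."""
    inside = False
    n = len(ring)
    for i in range(n):
        x1, y1 = ring[i]
        x2, y2 = ring[(i + 1) % n]
        # Only edges that straddle the point's latitude can be crossed
        # by a horizontal ray cast from the point.
        if (y1 > lat) != (y2 > lat):
            x_cross = x1 + (lat - y1) * (x2 - x1) / (y2 - y1)
            if lon < x_cross:
                inside = not inside
    return inside

def haversine_miles(lon1, lat1, lon2, lat2):
    """Great-circle distance in miles between two lon/lat points."""
    radius = 3958.8  # mean Earth radius in miles
    phi1, phi2 = math.radians(lat1), math.radians(lat2)
    dphi = phi2 - phi1
    dlmb = math.radians(lon2 - lon1)
    a = math.sin(dphi / 2) ** 2 + math.cos(phi1) * math.cos(phi2) * math.sin(dlmb / 2) ** 2
    return 2 * radius * math.asin(math.sqrt(a))

def find_nearest(lon, lat, features, max_miles):
    """Return (distance, name) pairs within max_miles, nearest first."""
    hits = []
    for name, flon, flat in features:
        distance = haversine_miles(lon, lat, flon, flat)
        if distance <= max_miles:
            hits.append((distance, name))
    hits.sort()
    return hits

# Hypothetical data: a square service area and two fire stations.
service_area = [(-105.0, 39.0), (-104.0, 39.0), (-104.0, 40.0), (-105.0, 40.0)]
stations = [("station_a", -104.5, 39.6), ("station_b", -104.5, 40.5)]

customer = (-104.5, 39.5)
in_service_area = point_in_polygon(customer[0], customer[1], service_area)
nearby = find_nearest(customer[0], customer[1], stations, max_miles=20)
```

Here the customer falls inside the service area, and only `station_a` (roughly 7 miles away) is within the 20-mile search distance. The same two functions can be chained per record, which is the pattern a distributed engine would run in parallel over many locations.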

While each of these features can be processed with separate tools from various providers, having them all in one resource for Spark or Kubernetes enables organizations to quickly integrate these queries under the same access standards. This approach saves organizations time and money because there is no longer a need to integrate a Point-in-Polygon operation from one library with a points-of-interest search tool from a different vendor.

Data extraction

Extract attributes, calculate + measure, compare + combine

Data extraction is the third of the four keys to geo-enrichment. Extracting attributes is the basic form of data extraction. However, the other two options (calculate + measure, compare + combine) are often overlooked because organizations focus on simple insights from location intelligence information, largely due to the operational challenges of automating input from complex spatial information. For example, calculating the elevation change from the ground at a property to the closest flood zone, or counting how many homes are within 20 minutes of every house in the country, is hard enough using a desktop GIS. Such calculations were practically impossible for automated processes with billions of customer locations.

The data extraction key is often reduced to simply getting feature attributes, for example the name of a neighborhood, the type of a flood zone, or a business industrial code. However, the following information extraction can be done with scaled and ephemeral compute using Spark and Kubernetes:

Calculate + measure – this goes beyond finding distances, calculating areas, and determining heights and adjacency, to answer questions like:

- “How much of this property is below a 12-foot storm surge?”

- “How many floors can get the 5G signal if the antennas are in these locations?”

- “How many structures are within 20 feet of this building, and how far away are they?”
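Two of the questions above can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the parcel is represented by equally sized elevation samples in feet, structures are reduced to single points in a local planar coordinate system measured in feet (so distances are point to point, not footprint to footprint), and the names `share_below_surge` and `structures_within` are hypothetical.

```python
import math

def share_below_surge(elevations_ft, surge_ft):
    """Fraction of equally sized sample cells lying below the surge height."""
    if not elevations_ft:
        raise ValueError("no elevation samples supplied")
    below = sum(1 for elevation in elevations_ft if elevation < surge_ft)
    return below / len(elevations_ft)

def structures_within(origin_xy, structures, max_feet):
    """Return (name, distance_ft) for structures within max_feet, nearest first."""
    ox, oy = origin_xy
    hits = []
    for name, x, y in structures:
        distance = math.hypot(x - ox, y - oy)
        if distance <= max_feet:
            hits.append((name, distance))
    hits.sort(key=lambda hit: hit[1])
    return hits

# Hypothetical elevation samples (feet) across one property.
parcel_samples_ft = [8.0, 9.5, 11.0, 12.5, 14.0, 15.5, 10.0, 13.0]
flooded_share = share_below_surge(parcel_samples_ft, surge_ft=12.0)

# Hypothetical neighboring structures, as offsets in feet from the building.
neighbors = [("shed", 12.0, 5.0), ("garage", 30.0, 40.0), ("gazebo", -6.0, 8.0)]
close_structures = structures_within((0.0, 0.0), neighbors, max_feet=20.0)
```

With these sample values, half of the property's cells sit below a 12-foot surge, and the gazebo and shed (about 10 and 13 feet away) are the structures within 20 feet.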

Compare + combine – this allows organizations to extract geospatial information from several sources and compare it. The questions listed above each compare multiple geospatial facts. For example, the elevation of a property is extracted from property attributes and compared with LiDAR elevations, which creates better insight about elevation changes on the property. LiDAR elevations can also be combined with building height information to determine how tall structures are.

While these may seem like simple questions, they can be very hard for organizations to answer in automated processes. The large datasets and compute requirements don’t fit into existing business processes. However, with Spark and Kubernetes these processes can run in scaled environments and use compute only when it is necessary. This opens up the use of more complicated calculations, and the added geospatial data brings new insight.
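As a rough sketch of compare + combine, the Python below compares a recorded property elevation with LiDAR ground samples, then combines the LiDAR ground elevation with a building height attribute. Everything here is an assumption made for illustration: the function names, the sample values, and the reading of "combine" as adding height to ground elevation to get a rooftop elevation.

```python
from statistics import mean

def compare_elevation(recorded_ft, lidar_samples_ft):
    """Compare a recorded property elevation with LiDAR ground samples."""
    lidar_mean = mean(lidar_samples_ft)
    return {
        "lidar_mean_ft": lidar_mean,
        # Positive values mean LiDAR places the ground higher than the record.
        "difference_ft": lidar_mean - recorded_ft,
        # Spread between the lowest and highest sample on the property.
        "relief_ft": max(lidar_samples_ft) - min(lidar_samples_ft),
    }

def rooftop_elevation(ground_ft, building_height_ft):
    """Combine ground elevation and building height into a rooftop elevation."""
    return ground_ft + building_height_ft

# Hypothetical inputs: one attribute value and four LiDAR samples, in feet.
comparison = compare_elevation(recorded_ft=101.0,
                               lidar_samples_ft=[100.0, 102.0, 104.0, 106.0])
roof_ft = rooftop_elevation(comparison["lidar_mean_ft"], building_height_ft=35.0)
```

In this example the LiDAR mean is 2 feet above the recorded elevation, the property has 6 feet of relief, and the rooftop sits at 138 feet.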

Routing for insight

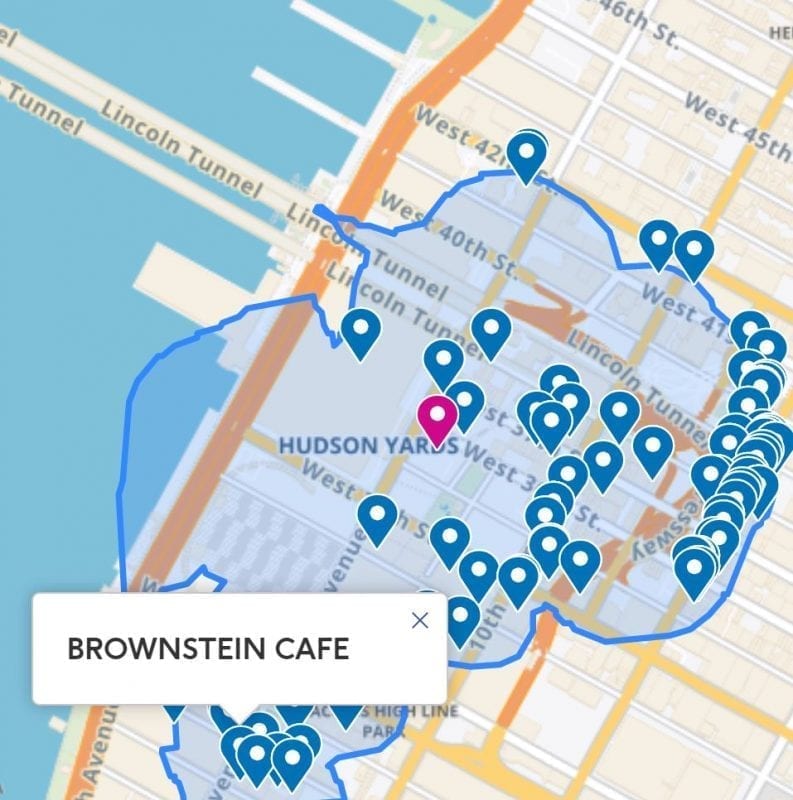

Feature selection and data extraction are even more impactful when routing technology is used because human travel behavior is more closely modeled. In Spark, the Spectrum Spatial Routing SDK is used to build catchments (or routing polygons). Catchments are created with drivetimes or drive distances from locations and are expensive to generate and store, so they are often not stored or used directly in business operations. Now we can use automated and scalable Spark queries to answer questions that reference catchments and inform investment decisions:

- “How many potential customers are within a 5-minute drivetime of this elementary school?”

- “How many brand-X coffee shops are within a 7-mile driving distance of all of the single-family homes in this state or postal code?”
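The idea behind a drivetime catchment can be illustrated with a shortest-path search over a tiny road graph. This sketch does not use the Spectrum Spatial Routing SDK; it uses Dijkstra's algorithm from the Python standard library, returns the set of reachable nodes rather than a polygon, and relies on a made-up graph, travel times, and customer counts.

```python
import heapq

def drivetimes(graph, origin):
    """Dijkstra's algorithm: minimum travel minutes from origin to each node."""
    best = {origin: 0.0}
    queue = [(0.0, origin)]
    while queue:
        minutes, node = heapq.heappop(queue)
        if minutes > best.get(node, float("inf")):
            continue  # stale queue entry, a faster route was already found
        for neighbor, edge_minutes in graph.get(node, []):
            candidate = minutes + edge_minutes
            if candidate < best.get(neighbor, float("inf")):
                best[neighbor] = candidate
                heapq.heappush(queue, (candidate, neighbor))
    return best

def catchment(graph, origin, max_minutes):
    """Nodes reachable from origin within max_minutes of driving."""
    times = drivetimes(graph, origin)
    return {node for node, minutes in times.items() if minutes <= max_minutes}

# Hypothetical road network: node -> [(neighbor, minutes), ...].
roads = {
    "school": [("a", 2.0), ("b", 4.0)],
    "a": [("school", 2.0), ("b", 1.0), ("c", 2.0)],
    "b": [("school", 4.0), ("a", 1.0), ("c", 3.0)],
    "c": [("a", 2.0), ("b", 3.0), ("d", 2.0)],
    "d": [("c", 2.0)],
}
# Hypothetical count of potential customers living at each node.
customers = {"a": 120, "b": 80, "c": 45, "d": 300}

five_minute_area = catchment(roads, "school", max_minutes=5.0)
reachable_customers = sum(customers.get(node, 0) for node in five_minute_area)
```

Node `d` is 6 minutes from the school, so it falls outside the 5-minute catchment and its 300 customers are excluded, leaving 245. Because each origin is computed independently, this kind of query parallelizes naturally across many locations.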

Summing it up: Next level geo-enrichment

Organizations have more data than ever before, but organizing that data, bringing context to it, and evaluating it over large volumes of records is tough. Additionally, digital business operations are rising quickly, and most businesses have automated between 70% and 80% of their processes. Leveraging both location intelligence data and technology provides the most accurate insights and helps organizations take on digital transformation successfully.

Cloud-native and Spark spatial processing tools can greatly help organizations with siloed geospatial data, as well as data science teams that want to integrate more GIS data from outside an organization. With tools carefully designed to use geospatial reference data, Spark and Kubernetes technology, and data science and analytical models, organizations can quickly find what is nearby and save both time and money in large-scale analytics.