Poor Data Quality is Pervasive, Impacting Trust in Data and Success Across Data Programs

The results are in! The 2023 Data Integrity Trends and Insights Report, published by Precisely in partnership with Drexel University’s LeBow College of Business, delivers groundbreaking insights into the importance of trusted data.

For the report, more than 450 data and analytics professionals worldwide were surveyed about the state of their data programs.

One major finding? Low data quality is a pervasive theme across the survey results, reducing trust in data used for decision-making and challenging organizations’ ability to achieve success in their data programs.

Let’s explore more of the report’s findings around the challenges and impacts of poor data quality.

Quality issues dominate the data discussion

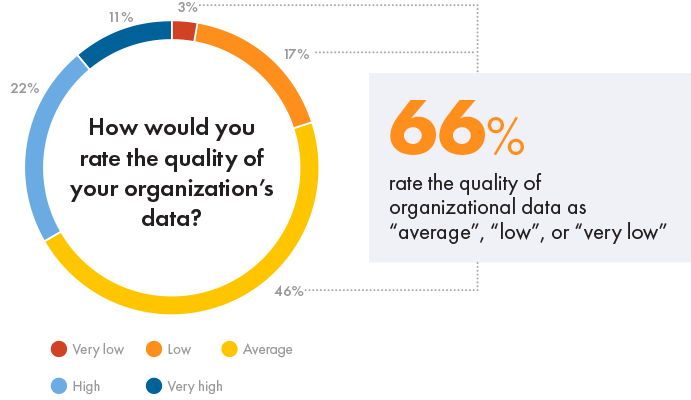

The 2023 Survey of Data and Analytics Professionals, from the Center for Business Analytics at Drexel University’s LeBow College of Business (LeBow) in partnership with Precisely, revealed that poor data quality is pervasive, with 66% of respondents rating the quality of organizational data as “average,” “low,” or “very low.” Moreover, poor data quality has a ripple effect across data programs and the business itself.

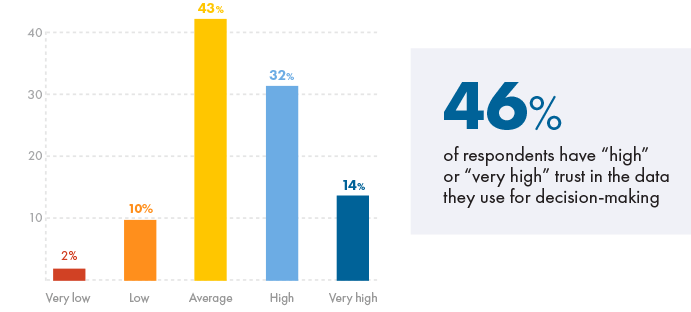

Given that 77% of respondents say the leading goal of their data programs is data-driven decision-making, low-quality data shows its impact most clearly in the trust placed in the data used to make decisions that shape the business.

More than half of respondents (55%) rate their organization’s ability to trust the data used for decision-making as “average,” “low,” or “very low.” It is unsurprising, then, that 71% of respondents report that their organizations spend 25% or more of their work time preparing data for reporting and decision-making.

Obstacles to achieving high-quality data

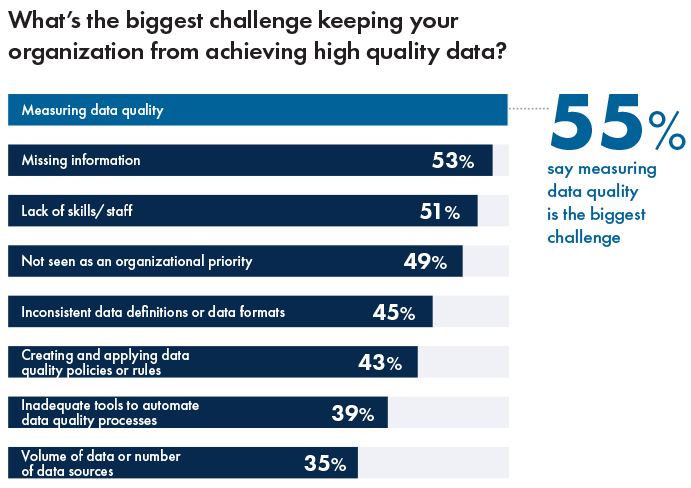

Focusing on those respondents who rated the quality of their organization’s data as “low” or “very low,” we find that measuring data quality (55%), missing information (53%), and lack of skills/staff (51%) are their most significant challenges to achieving high-quality data. Other roadblocks include inconsistent data definitions or formats (45%), challenges in working with data quality policies or rules (43%), and inadequate tools for automating data quality processes (39%).

While data quality and data governance policies and software can address these issues, making those investments requires an organizational commitment to data quality. However, almost half (49%) said that their companies did not see data quality as an organizational priority.

The ripple effect: Impact of poor data quality on the success of data programs

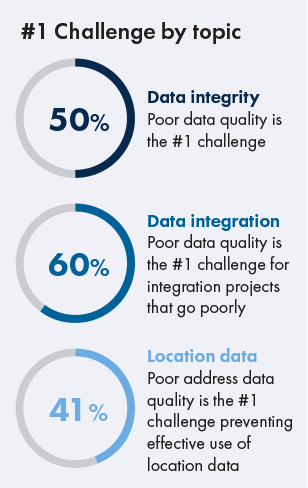

Overall, 36% of respondents said that poor data quality is a challenge to the success of their organization’s data programs. However, among respondents whose organizations have low trust in the data they use for decision-making, 70% named data quality as the greatest challenge to success, a correlation that is hardly surprising. When we take a narrower look at challenges to success, data quality takes the leading role.

Because data quality is such a pervasive issue for organizations today, we are seeing a shift in priorities. During the big data boom, data leaders’ biggest concern was volume. Now that dealing with massive volumes of data is the new normal, data leaders are focusing on the quality of the data used to run the business.

Get inspired for your data integrity journey

How does your data program compare to those of your peers? Do you have the data integrity needed to achieve and exceed your business goals? For more insights and inspiration for new use cases with trusted data, read the full 2023 Data Integrity Trends and Insights Report.